GOOGLE SEARCH AI

AI is the future of Google Search. However, not in the way you think. The web search company isn't all in on chatbots (though it is developing one called Bard), and it isn't redesigning its homepage to look more like a ChatGPT-style messaging system. Instead, Google is putting AI front and centre in the internet's most valuable real estate: its existing search results.

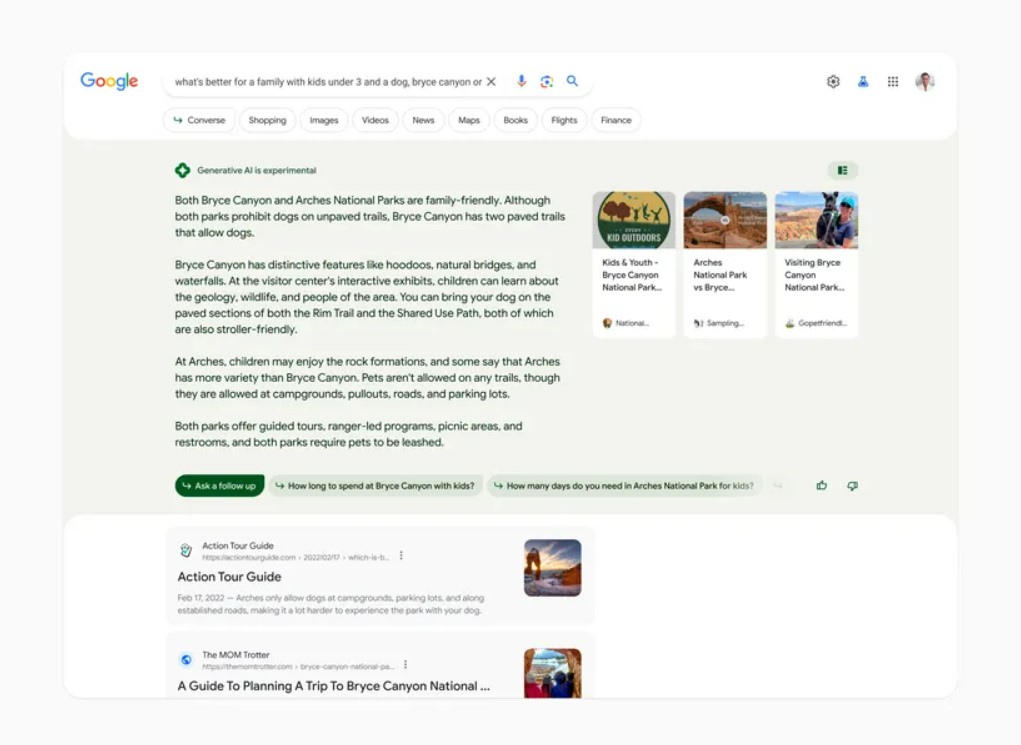

Liz Reid, Google's VP of Search, opens her laptop and begins typing into the Google search box to demonstrate. "Why is sourdough bread still so popular?" she writes before pressing the enter key. Google's standard search results appear almost instantly. A rectangular orange section above them pulses and glows, displaying the phrase "Generative AI is experimental." After a few seconds, the glowing is replaced by an AI-generated summary: a few paragraphs describing how good sourdough tastes, the benefits of its prebiotic properties, and more. To the right, there are three links to websites whose content, Reid says, "corroborates" the information in the summary.

Google refers to this as an "AI snapshot." All of it is generated by Google's large language models and sourced from the open web. Reid then moves her mouse to the top right corner of the box and clicks "the bear claw," which resembles a hamburger menu with a vertical line to the left. The bear claw opens a new view: the AI snapshot is now divided into sentences, with links to the sources of the information for each sentence underneath. This, Reid emphasises, is corroboration. And she believes it is crucial to how Google's AI implementation differs. "We want [the LLM], when it says something, to tell us as part of its goal: what are some sources to read more about that?"

Google's search results page has a new look. It's AI-first, colourful, and unlike anything you've seen before. It's powered by some of Google's most advanced LLM research to date, such as a new general-purpose model called PaLM 2 and the Multitask Unified Model (MUM), which Google uses to understand multiple types of media. In the demos I've seen, it's often extremely impressive. And it alters the way you search, particularly on mobile, where that AI snapshot frequently consumes the entire first page of results.

There are some restrictions: in order to access these AI snapshots, you must first opt in to a new feature called Search Generative Experience (SGE), which is part of a larger feature called Search Labs. Not all searches will result in an AI response — the AI appears only when Google's algorithms believe it will be more useful than standard results, and sensitive subjects such as health and finances are currently set to avoid AI interference entirely. However, it appeared in my brief demos and testing whether I searched for chocolate chip cookies, Adele, nearby coffee shops or the best films of 2022. AI may not kill the ten blue links, but it certainly pushes them down the page.

Google executives have repeatedly told me that SGE is an experiment. However, they are clear that they see it as a long-term foundational change in the way people search. AI adds another layer of input, allowing you to ask better and more detailed questions. It also adds another layer of output that is intended to both answer your questions and lead you to new ones.

An opt-in box at the top of search results may appear to be a minor step from Google in comparison to Microsoft's AI-first Bing redesign or the sheer novelty of ChatGPT. However, SGE is only the first step in a major overhaul of how billions of people find information online, and of how Google makes money. As far as pixels on the internet go, these are the most important.

Questioned and answered

Google is pleased with the state of its search results. We've moved on from the "10 blue links" era of Google, when you typed in a box and got links back. You can now search by asking questions aloud or taking a picture of the world, and you could get everything from images to podcasts to TikToks in return.

These results already serve many searches well. If you go to Google and search "Facebook" to get to facebook.com, or look up the height of the Empire State Building, you're already good to go.

However, there are some queries for which Google has never quite worked, and this is where the company is hoping AI can help. Take "Where should I go in Paris next week?" or "What's the best restaurant in Tokyo?" These are difficult questions to answer because neither is really a single question. What is your budget? When are all the museums in Paris open? How long are you prepared to wait? Do you have any children with you? On and on it goes.

"The bottleneck turns out to be what I call 'the orchestration of structure,'" explains Prabhakar Raghavan, Google's SVP in charge of Search. Much of that data is available on the internet or even within Google — museums post hours on Google Maps, people leave reviews about restaurant wait times — but putting it all together into something resembling a coherent answer is extremely difficult. "People want to say, 'plan me a seven-day vacation,'" Raghavan explains, "and they believe that if the language model outputs, it must be correct."

One way to consider these is as questions with no correct answer. A large percentage of people who use Google aren't looking for information that already exists somewhere. They're looking for ideas and want to experiment. And, since there's probably no internet page titled "Best vacation in Paris for a family with two kids, one of whom has peanut allergies and the other loves football, and you definitely want to go to the Louvre on the quietest possible day of the week," the links, podcasts, and TikToks won't be much help.

Large language models, which are trained on a massive corpus of data from all over the internet, can help answer those questions by essentially running many disparate searches at once and then combining that information into a few sentences and a few links. "A lot of times, you have to take a single question and break it down into 15 questions" to get useful information from search, Reid says. "May I just ask one? How do we alter the way information is organised?"

That's the idea, but Raghavan and Reid are quick to point out that SGE still struggles with completely creative acts. It will be much more useful right now for synthesising all the search data behind questions like "what speaker should I buy to take into the pool?" It'll also do well with "what were the best films of 2022," because it has some objective Rotten Tomatoes data to draw from, in addition to the internet's many rankings and blog posts on the subject. Google appears to be becoming a better information-retrieval machine as a result of AI, even if it isn't quite ready to be your travel agent.

What was missing from the majority of SGE demos? Ads. Google is still experimenting with how to incorporate ads into AI snapshots, but rest assured, they are on their way. For any of this to stick, Google will need to monetise the hell out of AI.

Google's Bot

During our demonstration, I asked Reid to search only for the word "Adele." The AI snapshot included what you'd expect (some information about her past, her accolades as a singer, and a note about her recent weight loss) and then added that "her live performances are even better than her recorded albums." Google's AI has thoughts! Reid quickly clicked the bear claw and attributed that sentence to a music blog, but she also admitted that this was a system failure.

Google's search AI is not meant to form opinions. It is not permitted to use the word "I" when responding to questions. Unlike Bing's multiple personalities, ChatGPT's chipper assistant, or even Bard's entire "droll middle school teacher" vibe, Google's search AI is not attempting to appear human or affable. It is actually attempting to avoid being those things. "You want the librarian to really understand you," says Reid. "But, most of the time, when you go to the library, your goal is for them to help you with something, not for them to be your friend." That's the vibe Google wants to convey.

The reason for this is more than just that strange itchy sensation you get after talking to a chatbot for too long. And it doesn't appear that Google is simply trying to avoid super horny AI responses. It's more of a recognition of the current situation: large language models are suddenly everywhere, they're far more useful than most people would have predicted, and yet they have a worrying tendency to be confidently wrong about almost everything. People will believe the wrong things if that confidence comes in perfectly formed paragraphs that sound good and make sense.

Several executives I spoke with mentioned a conflict in artificial intelligence between "factual" and "fluid." You can create a system that is factual, in the sense that it provides you with a lot of useful and well-founded information. You can also create a system that is fluid and feels completely natural. Perhaps one day you'll be able to have both. But, for the time being, the two are at odds, and Google is working hard to lean towards the factual. According to the company, it is preferable to be correct rather than interesting.

Google exudes confidence in its ability to be factually sound, but recent history suggests otherwise. Not only is Bard less wacky and entertaining than ChatGPT or Bing, but it is also frequently less correct: it makes basic errors in math, information retrieval, and other areas. The PaLM 2 model should help with some of that, but Google hasn't solved the "AI lies" problem by any means.

There's also the issue of when AI should appear. The snapshots should not appear if you ask sensitive medical questions, or if you intend to do something illegal or harmful, according to Reid. However, AI may or may not be useful in a wide range of searches. If I search "Adele," having some basic summary information at the top of the results page is helpful; if I search "Adele music videos," I'm much more likely to want only the YouTube videos in the results.

Reid claims that Google can afford to be cautious here because the fail state is simply Google search. So, whenever the snapshot should not appear, or when the model's confidence score is low enough that it may not be more useful than the top few results, it's simple to do nothing.

Bold and responsible

SGE feels incredibly conservative in comparison to the splashy launch of the new Bing or the breakneck development pace of ChatGPT. It's a free, no-nonsense tool that compiles and summarises your search results. Is that enough for a company facing an existential crisis as AI changes the way people interact with technology?

Two executives used the same phrase to describe the company's strategy: "bold and responsible." Google understands the importance of moving quickly — not only are chatbots gaining popularity, but TikTok and other platforms are stealing some of the more exploratory search out from under Google. However, it must avoid making mistakes, providing incorrect information, or creating new problems for users. That would be a PR disaster for Google, would give people even more reasons to try new products, and would potentially destroy the business that helped Google become a trillion-dollar company.

So, for the time being, SGE is opt-in and personality-free. Raghavan is content to play a longer game, stating that "knee-jerk reacting to some trend is not necessarily going to be the way to go." He is also convinced that AI is not a panacea that will change everything, and he doubts that in ten years we will all be doing everything through chatbots and LLMs. "I think it's going to be one more step," he says. "It's not as if the old world vanished. And we're in a completely different world."

Google Bard, in other words, is not the future of Google search. However, AI is. Over time, SGE will emerge from the labs and into the search results of billions of users, mingling generated data with links to the web. It will affect Google's business and, most likely, the way the internet works. If Google gets it right, it will exchange ten blue links for all of the internet's knowledge, all in one place. And, hopefully, it will tell the truth.
